Read time: 7 minutes

The Assessment Network at Cambridge recently surveyed over 200 assessment and education professionals from across sectors and global regions to find out more about their training and development needs. Over 70% of respondents said that ‘AI and changes to the digital landscape’ were the biggest challenges they faced, both now and in the future.

At The Assessment Network we believe in bringing together powerful knowledge and insights from assessment professionals across sectors, so we invited a diverse range of speakers to our Assessment Horizons conference in April to reflect on key themes in this growing area of discussion. We hoped to help answer some of the questions raised in the survey, including:

- “How can we prevent malpractice becoming a huge issue with the onset of AI?”

- “How can AI transform the design of assessment items for our respective academic subjects?”

- “What are the specific AI literacy skills that we should impart to our students such that it prepares them [for the] workforce?”

So how do we meet these challenges as an assessment community?

Creating robust assessments in the age of AI

Stakeholders in Higher Education have had to respond rapidly to the changes brought by large language models entering mainstream use.

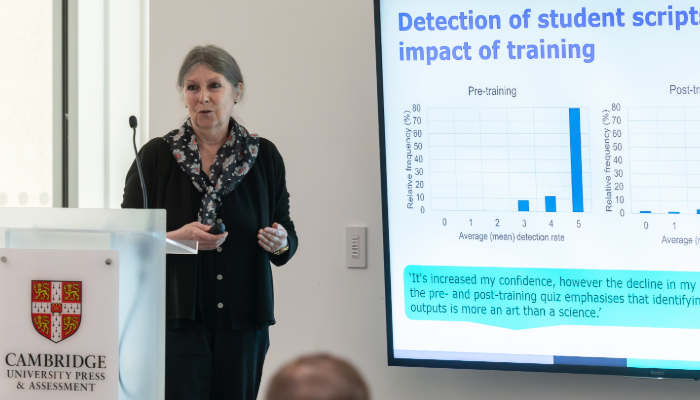

At Assessment Horizons we were joined by Jonquil Lowe from The Open University and Gray Mytton from NCFE, who shared findings from their research project on training exam markers to spot the use of generative AI in assessments. The project identified some ‘hallmarks’ of Gen AI use, including:

- Discussion that is so balanced that no particular opinion or conclusion is offered

- Failure to use specific module materials, relying instead on external sources

- Lack of close textual analysis and supporting quotations

However, one problem with training examiners to identify AI use while marking was that markers flagged ‘false positives’. Jonquil also noted that the fieldwork for this project was carried out a year ago, and that the rapid pace of LLM development means this type of intervention is a “moving target”.

Jonquil said: “The research suggests that trying to detect misuse of generative AI is not effective. Instead, what we learn about Gen AI can help us design more robust questions and marking guidance that focus on assessing the added value that humans bring to a task.”

The research report advised assessment designers to risk-assess the question types in exams and assignments that are most vulnerable, namely those on which generative AI is likely to perform well.

Finally, they suggested that where students are found to have used AI for malpractice or plagiarism, this could be addressed through a greater focus on students’ study skills and by encouraging more ethical use of LLMs as a form of study support. They highlighted a need for greater AI understanding among the student population; as Jonquil concluded:

“We do need to prepare students for the future, both by developing their critical AI literacy and the implications of using AI so we can support them to use it ethically and effectively.”

AI and assessment literacy – led by the student voice

A team from University College Dublin answered that need with reflections on a unique podcasting project that developed both AI literacy and assessment literacy among students at UCD’s College of Engineering and Architecture.

Associate Professor Jennifer Keenahan explained the impetus for the project: “We noticed that the students’ voice was largely absent from the conversation on AI and assessment. There was lots of debate amongst practitioners and academics, but very little on students’ opinions. But we know from academic literature that listening to the student’s voice leads to better outcomes not just for themselves but also for the University as a whole.” A podcasting project was chosen as it seemed the perfect medium to elevate and share these voices.

A team of engaged first-year students investigated AI’s impact across six assessment types: closed-book exams, lab reports, online exams, group projects, reflective assignments, and individual assignments. A variety of future-orientated insights surfaced from the students during the ‘Bridges and Bytes - The student voice on AI and assessment’ series. These included:

- “The calculator approach” – will there come a time when every student walks into an exam hall with an AI terminal, just as Engineering students currently do with a calculator?

- Prompt Engineering Exams – where students are assessed on their prompting skills

- Students themselves assessing the accuracy of AI outputs

Fourth-year student Amy Lawrence inspired the audience with her own reflections from taking part in the project. She argued that “Curiosity, our criticism, and our creative thinking are three defences against the kind of AI usage that harms our education”, asking the education community:

“How can you deliver content to your students in a way that excites their passion and their curiosity, such that they feel compelled to express their own thoughts? How can you challenge your students to critically analyse the outputs that they get from their AI tools? And how can you reward resourcefulness and innovation and creativity in your students when they solve a problem in a new and more efficient way using AI?”

“There's still a huge opportunity, and I believe imperative for us all to take a step towards understanding AI and holding each other to the standard of using it responsibly… I think the biggest danger of AI use is the mindless acceptance of its outputs. As educators, I certainly think it is your place to start rallying against this.”

Simon Child, Head of Assessment Training at The Assessment Network, commented: “This project represents the intersection of AI and assessment literacy – and demonstrates the value for learners and educators in getting to grips with both. Through experimenting with AI tools and understanding the underpinning principles of good assessment, we all put ourselves in a stronger position to look to the future as an assessment community dedicated to getting it right for our learners.”

AI for marking and non-exam assessment

Other sessions at Assessment Horizons also explored AI and digital developments. Tony Leech, a Senior Researcher at OCR, led a session on non-exam assessment (NEA) and AI.

Tony invited delegates to consider the constructs assessed through non-exam assessment, and highlighted OCR’s recent Striking the Balance report, which advocates shifting the question from simply whether AI was used to how it was used. He discussed recent and ongoing OCR research into the extent of malpractice, ways to detect it, and how teachers currently authenticate NEA work as the candidate’s own.

Tony described one way of framing regulation and practice around AI use: “secure lanes”, where AI use is strictly controlled, and “open lanes”, where restrictions are unenforceable. The distinction prompted debate on assessment validity. Ultimately, Tony argued that by getting the balance of AI use right, “we can have assessments that are future focused and relevant to the workplace and to higher education and so forth, but also really well designed, inclusive, supportive to candidates, and valuable.”

Meanwhile Tom Sutch, Principal Data Scientist and Research Lead at Cambridge, shared insights from his research into how AI can be used to mark low-stakes short-answer questions. He showed that deep learning models such as ‘BERT’ can achieve accuracy comparable to that of human examiners, although these models cannot explain their marking decisions in the way a human can.

He also highlighted the need to ensure that models attend to relevant features of a response, rather than picking up unintended biases from features such as language patterns or punctuation. Tom’s session emphasised that continuous monitoring remains essential to maintain validity and equity in automated assessment methods.

As we become more AI-literate as a community, our perceptions of AI’s limitations and uses evolve. This was borne out in a session led by Andrew Field and Natalia Sobrino-Saeb from Cambridge International’s Impact and Ideation team. They shared findings on teachers’ evolving attitudes to AI from 2023 to 2025. Teachers’ attitudes now look markedly more positive than they did two years ago, as they embrace the time-saving opportunities AI brings:

“Teachers are also using AI to save time on tasks like grading, so that we can focus more on students...Overall, AI is becoming a helpful tool in our classrooms.”

Primary school teacher, Ghana

Looking ahead as a community

As Jonquil acknowledged in the first keynote at Assessment Horizons, ChatGPT has already gone through several iterations since their research project was carried out, and as the capabilities of these language models expand, so too does the potential for both opportunities and challenges for stakeholders in assessment.

Reflecting on the conference sessions, James Beadle, Senior Professional Development Manager at The Assessment Network, said:

“Assessment professionals are increasingly having to make decisions about if, and how, AI should be incorporated into their assessments, from facilitating or even encouraging student use, to considering whether AI models can be used for item writing and marking purposes.

These decisions are often inherently complex: AI tools can potentially allow students to generate work that does not reflect an understanding of the construct of concern; however, by excluding them, do we then run the risk that our assessment tasks ARE becoming increasingly divorced from how similar activities are carried out in day-to-day professional activities?

At The Assessment Network, we believe in the importance of supporting key stakeholders in making informed decisions about their assessments, and we are delighted to be now beginning to do this in the area of AI.”

Members of The Assessment Network have access to all the recordings and presentations from this year's conference.

Illustration: Rebecca Osborne